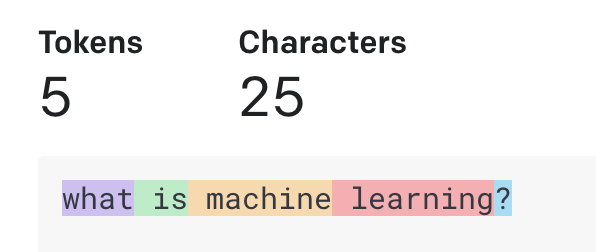

Tokenization in NLP

Tokenization is one of the most fundamental concepts in natural language processing (NLP). It breaks text into smaller units called tokens, such as words, subwords, or characters. Each token can then be mapped to a numeric ID that a model processes. Tokenization may seem simple on the surface, but doing it well is crucial for building accurate NLP models. In this comprehensive