The Expanding Universe of Large Language Models

Large language models (LLMs) have become quite a phenomenon in the last year. In this post, we will cover some of the popular LLMs. I will continue to update this list as more LLMs (both closed and open source) are released over the year.

We will mainly categorize LLMs into closed-source and open-source.

Closed Source LLMs

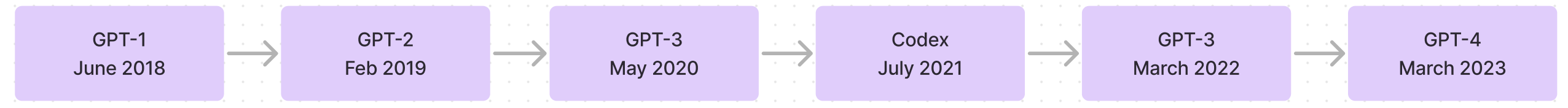

- GPT-4: GPT-4 is the largest and most advanced large language model developed by OpenAI. According to results released by OpenAI, it improves performance by roughly 15% across different benchmarks. This model was released in 2023.

- GPT-3: An earlier, less complex model from OpenAI, released in 2020. GPT-3 is a decoder-only Transformer model built on attention mechanisms.

- Claude: An LLM developed by Anthropic, Claude is known for its sophisticated design.

- Gopher: This is a product of DeepMind's research and development efforts.

- Chinchilla: This model was also developed by DeepMind, which is owned by Google's parent company, Alphabet.

- LaMDA: Developed by Google, LaMDA (Language Model for Dialogue Applications) is built specifically for conversational use.

- AlexaTM: This is Amazon's proprietary language model used in the Alexa virtual assistant.

- Cohere: Cohere offers its own family of models through an API, aimed at applications such as text generation and embeddings.

- BloombergGPT: Bloomberg's LLM, custom-tailored to the financial domain. Further details can be found in their research paper.
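
The GPT-3 entry above describes a decoder-only, attention-based model. As a rough illustration of what that means, here is a minimal sketch of single-head causal self-attention in plain Python. It assumes the query, key, and value vectors are supplied directly (a real model computes them with learned projections), and the function name `causal_attention` is made up for this post rather than taken from any library.

```python
import math

def causal_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Each argument is a list of float vectors, one per token.
    Position i may only attend to positions 0..i.
    """
    dim = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        # Scores against the current and earlier tokens only (the causal mask).
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        # Numerically stable softmax over the visible positions.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of the visible value vectors.
        outputs.append([
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ])
    return outputs
```

Because each position only sees itself and earlier positions, the model can be trained to predict the next token without looking ahead, which is the property that makes a decoder-only model usable for text generation.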

Open Source LLMs

- falcon-40b-instruct

- guanaco-65b-merged

- 30B-Lazarus

- LLaMA Model

- LLaMA 2: https://ai.meta.com/llama/

- Free Willy 2: https://stability.ai/blog/freewilly-large-instruction-fine-tuned-models

- Persimmon-8B: An open-source model from Adept AI.

- WizardLM: A family of LLMs trained for different tasks, including WizardCoder-Python-34B-V1.0 (code) and WizardMath-70B-V1.0 (math).

- Phi-1: A smaller LM from Microsoft with about 1.3B parameters.

- Mistral-7B: A 7B-parameter model from Mistral AI.

- TigerBot 70B Base: This is a 70B parameter model from TigerResearch.

- Mixtral MoE: Mixtral 8x7B is a high-quality sparse mixture-of-experts (SMoE) model with open weights.
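
The Mixtral entry above mentions a sparse mixture of experts. The core idea is that a small router picks a few experts per token and only those experts run. Below is a minimal sketch of top-k routing in plain Python. It assumes a simple linear router and toy experts; `top_k_moe`, `router_weights`, and the scaling experts are hypothetical names for illustration and are not Mixtral's actual code. The default `k=2` mirrors the two-experts-per-token routing that Mistral describes for Mixtral 8x7B.

```python
import math

def top_k_moe(token, router_weights, experts, k=2):
    """Route one token vector through the k highest-scoring experts.

    router_weights: one weight vector per expert (a linear router, assumed).
    experts: list of callables mapping a vector to a vector of the same size.
    """
    # Router logit for each expert: dot product of the token with its weights.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # Keep only the k experts with the largest logits (the "sparse" part).
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the chosen logits so the gate values sum to 1.
    peak = max(logits[i] for i in chosen)
    exps = {i: math.exp(logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    gates = {i: e / total for i, e in exps.items()}
    # Only the chosen experts are evaluated; the rest are skipped entirely.
    output = [0.0] * len(token)
    for i, gate in gates.items():
        expert_out = experts[i](token)
        output = [acc + gate * v for acc, v in zip(output, expert_out)]
    return output, gates

# Toy usage: four experts that each scale the input by a fixed factor.
toy_experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
toy_router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
mixed, gate_values = top_k_moe([2.0, 2.0], toy_router, toy_experts)
```

The practical appeal of this design is that the total parameter count can be large while the compute per token stays close to that of a much smaller dense model, since only the selected experts are evaluated.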

Many other open-source models are listed on the Hugging Face Open LLM Leaderboard.