Tokenization in NLP

Tokenization is one of the most fundamental concepts in natural language processing (NLP). It involves breaking down text into smaller units, or tokens, that are easier for computers to understand. While it may seem simple on the surface, effective tokenization is crucial for building accurate NLP models. In this comprehensive guide, we'll cover everything you need to know about tokenization in NLP.

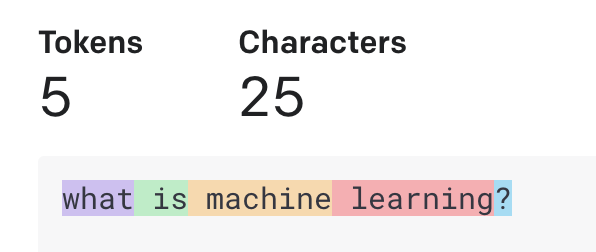

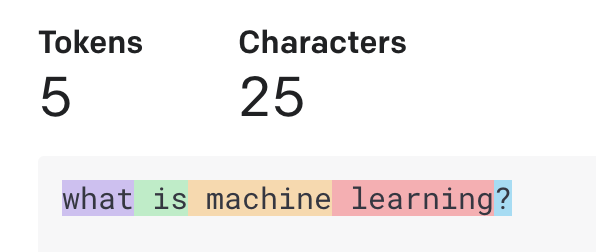

Example of how text looks after tokenization

Section 1: What is Tokenization in NLP?

Tokenization refers to the process of splitting text into smaller chunks called tokens. In NLP, tokens usually correspond to words, phrases, or sentences. The goal of tokenization is to break down long strings of text into smaller units that are meaningful to the application.

For example, consider the following sentence:

"The quick brown fox jumps over the lazy dog"

A tokenizer would split this into the following tokens:

["The", "quick", "brown", "fox", "jumps", "over", "the", "lazy", "dog"]

Tokenization transforms the raw text into a format that is easier for NLP algorithms to understand. It is typically one of the first steps in any NLP pipeline. Effective tokenization is key for tasks like part-of-speech tagging, named entity recognition, and sentiment analysis.

Section 2: Types of Tokenization

There are several different types of tokenization in NLP:

- Word Tokenization: This splits text into individual words. It involves identifying where word boundaries start and end, which can be challenging in some languages. Word tokenization is used for many NLP tasks.

- Sentence Tokenization: This splits text into individual sentences. It involves finding sentence boundaries and separating the text. Sentence tokenization is important for tasks like summarization and machine translation.

- Subword Tokenization: This splits words into smaller subword units, like prefixes, suffixes, and word stems. This helps handle rare or unknown words. Subword tokenization is commonly used in neural network NLP models.

Section 3: Tokenization Methods

Some common methods for implementing tokenization include:

- White Space Tokenization: This splits text on white spaces and punctuation. It's a simple baseline approach but falls short for more complex cases.

- Regular Expression Tokenization: This uses regex patterns to match and split tokens. More complex rules can be implemented to handle issues like punctuation and contraction cases.

- NLTK Tokenization: The Natural Language Toolkit Python library contains tokenizer implementations for sentence and word tokenization.

- SpaCy Tokenization: SpaCy is another Python NLP library with built-in tokenizers. It offers rule-based and statistical tokenizers.

In Python, you can do word tokenization using various libraries like NLTK (Natural Language Toolkit), spaCy, and even Python's standard library str.split() method (though the latter is quite rudimentary). Below are examples using different methods.

text = "Hello, world! How are you?"

tokens = text.split()

print(tokens)

# output ['Hello,', 'world!', 'How', 'are', 'you?']This following example shows how to use NLTK to get tokens.

import nltk

nltk.download('punkt')

from nltk.tokenize import word_tokenize

text = "Hello, world! How are you?"

tokens = word_tokenize(text)

print(tokens)

['Hello', ',', 'world', '!', 'How', 'are', 'you', '?']

spaCy is another powerful library for NLP tasks. First install spaCy and download the language model

import spacy

nlp = spacy.load("en_core_web_sm")

text = "Hello, world! How are you?"

doc = nlp(text)

tokens = [token.text for token in doc]

print(tokens)

['Hello', ',', 'world', '!', 'How', 'are', 'you', '?']

Section 4: Best Practices for Tokenization

Here are some key best practices to keep in mind when implementing and evaluating tokenization:

- Consistency: The tokenizer should be consistent in how it handles edge cases. Inconsistencies can negatively impact downstream models.

2. Domain-Specific: Tokenization should be tailored for the domain. Models trained on news may tokenization differently than social media text, for example.

3. Handle Edge Cases: Things like punctuation, contractions, hyphenation, and more should be considered when designing tokenization rules.

4. Evaluation: Tokenizers should be rigorously evaluated using metrics like accuracy and consistency. The human evaluation also helps catch errors.

Tokenization is a critical first step in any NLP pipeline. It transforms raw text into a format that machines can easily process. While it may seem trivial, proper tokenization is vital for training accurate models. Carefully thinking through the types of tokenization, methods, and best practices outlined in this guide will lead to more robust NLP systems.

Meta Description: This in-depth guide covers what tokenization is, why it's important in NLP, different types of tokenization, and best practices for implementing tokenization.

References

- SentencePiece: A simple and language independent subword tokenizer and detokenizer for Neural Text Processing https://arxiv.org/abs/1808.06226