What is Reinforcement Learning from Human Feedback?

Reinforcement learning from human feedback (RLHF) trains AI systems to generate text or take actions that align with human preferences. It has become one of the central methods for fine-tuning large language models (LLMs). In particular, RLHF was a key component in training GPT-4, Claude, Bard, and the LLaMA-2 chat models. Rather than only imitating the distribution of its training data, a model trained with RLHF is optimized directly toward outputs that humans rate highly.

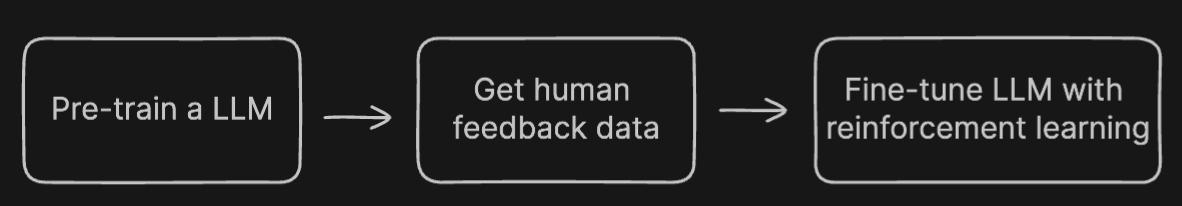

The key steps are:

- Start with a pretrained language model (LM) such as GPT-3. The model can be used off-the-shelf or fine-tuned further (for example, on supervised demonstrations) before the RLHF stages.

- Gather human feedback data. Humans judge model outputs, indicating which texts (or action sequences) are better or worse. This data trains a reward model that predicts how highly humans would rate new text or actions.

- Use reinforcement learning to fine-tune the LM to maximize the reward model's scores, i.e., to generate text or actions that humans would rate highly.
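In many RLHF setups (InstructGPT is a well-known example), the quantity maximized in the RL step is not the raw reward-model score alone but the score minus a penalty on how far the tuned model drifts from the starting LM, which discourages it from exploiting flaws in the reward model. The sketch below computes such a KL-penalized reward for one sampled response. It assumes per-token log-probabilities from both models are already available; the function name and the default `beta` are illustrative, and the RL algorithm itself (commonly PPO) is not shown.

```python
def kl_penalized_reward(rm_score, policy_logprobs, ref_logprobs, beta=0.1):
    """Sequence-level reward for the RL step, assuming a KL-penalty formulation.

    rm_score: scalar score from the reward model for the full response.
    policy_logprobs: log-prob of each generated token under the model being tuned.
    ref_logprobs: log-prob of the same tokens under the frozen starting LM.
    beta: penalty strength (illustrative default; tuned per setup in practice).
    """
    if len(policy_logprobs) != len(ref_logprobs):
        raise ValueError("log-prob sequences must align token by token")
    # Sum of per-token log-ratios: a single-sample estimate of KL(policy || reference).
    kl_estimate = sum(p - r for p, r in zip(policy_logprobs, ref_logprobs))
    # High reward-model scores are rewarded; drifting from the reference is penalized.
    return rm_score - beta * kl_estimate
```

For example, `kl_penalized_reward(1.5, [-0.2, -1.0], [-0.5, -1.0], beta=0.1)` gives 1.47: the tuned model assigns its first token more probability than the reference does, so a small penalty is subtracted. Larger `beta` keeps the model closer to the original LM at the cost of less reward optimization.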

Design choices such as which LM to start from, how much feedback data to collect, and how to configure the reinforcement learning step make RLHF a complex training procedure with many tunable hyperparameters. Best practices are still an open research question.

The key ideas are: pretrained LMs capture a lot of linguistic knowledge, reward models encode human preferences, and reinforcement learning optimizes the LM for high reward. Combining these lets RLHF produce outputs that satisfy human preferences without requiring extensive supervised datasets of ideal outputs.

Reward Model

The reward model (RM) assigns a score to a response for a given prompt, such that responses humans prefer receive higher scores. The key challenge is obtaining reliable training data, because absolute scores from human raters tend to be inconsistent.

Instead of direct scores, the training data consists of comparisons: tuples of (prompt, winning response, losing response). Each tuple records only that the winning response was preferred over the losing one, so raters never need to assign absolute scores.
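A minimal sketch of this data format follows. The field names `chosen` and `rejected` are illustrative, not a fixed standard. It also assumes raters may rank several responses to the same prompt at once (as was done for InstructGPT), in which case one ranking can be expanded into every pairwise comparison it implies.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Comparison:
    """One reward-model training example: the chosen response beat the rejected one."""
    prompt: str
    chosen: str    # winning (preferred) response
    rejected: str  # losing response


def ranking_to_comparisons(prompt, ranked_responses):
    """Expand a best-to-worst ranking of K responses into K*(K-1)/2 comparisons."""
    pairs = []
    # combinations yields index pairs (i, j) with i < j, so response i was ranked higher.
    for i, j in combinations(range(len(ranked_responses)), 2):
        pairs.append(Comparison(prompt, ranked_responses[i], ranked_responses[j]))
    return pairs
```

A ranking `["A", "B", "C"]` yields the pairs (A over B), (A over C), and (B over C). Because one ranking produces several correlated pairs, they are often kept together in training rather than shuffled independently, though that is a design choice rather than a requirement.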

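The model is trained to push the winning response's score above the losing response's score for the same prompt. This is typically framed as a pairwise ranking objective: a Bradley-Terry-style loss, the negative log-sigmoid of the score difference, which decreases as the difference grows.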
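The pairwise loss can be written as loss = -log(sigmoid(r_chosen - r_rejected)), where r denotes the reward model's score. The sketch below evaluates it for given scores in plain Python; in a real setup the scores would come from the RM and the loss would be backpropagated by an autodiff framework. It uses the identity -log(sigmoid(d)) = softplus(-d), computed in a numerically stable form.

```python
import math


def pairwise_rm_loss(score_chosen, score_rejected):
    """Bradley-Terry style loss: -log(sigmoid(score_chosen - score_rejected))."""
    x = -(score_chosen - score_rejected)
    # Stable softplus(x) = log(1 + exp(x)); avoids overflow for large |x|.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def batch_loss(score_pairs):
    """Mean loss over a batch of (score_chosen, score_rejected) pairs."""
    return sum(pairwise_rm_loss(w, l) for w, l in score_pairs) / len(score_pairs)
```

Equal scores give a loss of log 2 (about 0.693), and the loss approaches 0 as the chosen score pulls ahead. Because only the difference enters the loss, adding the same constant to every score changes nothing, so RM scores are meaningful relative to each other rather than as absolute values.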

The reward model is often initialized from a pretrained language model, frequently one similar to the LM being tuned, because scoring responses well requires understanding the nuances of language. Since the RM has to reliably score the LM's samples, starting it at a similar level of capability helps.
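A common way to build such an RM is to keep the pretrained LM body and replace its next-token output layer with a linear head that maps one hidden state to a single scalar. The sketch below shows that structure in plain Python. The `encoder` callable is a hypothetical stand-in for the LM body (token ids in, one hidden vector per token out); reading the final token's state assumes a causal LM, where that position has attended to the whole prompt and response.

```python
from typing import Callable, List, Sequence

# Hypothetical interface for the pretrained LM body: token ids -> hidden vector per token.
Encoder = Callable[[Sequence[int]], List[List[float]]]


class RewardModel:
    """LM body plus a scalar head in place of the usual next-token head."""

    def __init__(self, encoder: Encoder, head_weights: List[float], head_bias: float = 0.0):
        self.encoder = encoder
        self.head_weights = head_weights  # linear head: hidden size -> 1
        self.head_bias = head_bias

    def score(self, prompt_ids: Sequence[int], response_ids: Sequence[int]) -> float:
        hidden = self.encoder(list(prompt_ids) + list(response_ids))
        # In a causal LM, the last position has seen the full prompt + response.
        last = hidden[-1]
        return sum(w * h for w, h in zip(self.head_weights, last)) + self.head_bias
```

With a toy encoder `lambda ids: [[float(t), 1.0] for t in ids]` and head weights `[0.5, 2.0]`, scoring prompt `[1, 2]` with response `[4]` reads the hidden state `[4.0, 1.0]` and returns 4.0. In practice the head and the LM body are trained together on the pairwise loss.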

In summary, the key aspects of training the reward model are:

- Using comparative data as supervision rather than absolute scores

- Maximizing the score difference between preferred and non-preferred responses

- Leveraging powerful model architectures, often initialized from the LM
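The first two points can be seen together in a toy example: a linear reward model fitted only on comparison pairs by gradient descent on the pairwise loss. This is an illustration under strong simplifying assumptions: each response is reduced to a small hand-made feature vector (the features and data are invented for this sketch), whereas a real RM computes its score with an LM-based network.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_linear_rm(comparisons, dim, lr=0.5, epochs=200):
    """Fit reward(f) = w . f on (chosen_features, rejected_features) pairs.

    Minimizes mean -log(sigmoid(w . chosen - w . rejected)) with full-batch
    gradient descent; no absolute scores are ever used.
    """
    w = [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for chosen, rejected in comparisons:
            diff = [c - r for c, r in zip(chosen, rejected)]
            margin = sum(wi * di for wi, di in zip(w, diff))
            # Gradient of -log(sigmoid(margin)) w.r.t. w is -(1 - sigmoid(margin)) * diff.
            coeff = 1.0 - sigmoid(margin)
            for k in range(dim):
                grad[k] -= coeff * diff[k]
        w = [wi - lr * gk / len(comparisons) for wi, gk in zip(w, grad)]
    return w


def reward(w, features):
    return sum(wi * fi for wi, fi in zip(w, features))


# Invented features per response: [helpfulness, rudeness].
data = [([0.9, 0.1], [0.2, 0.8]), ([0.7, 0.0], [0.6, 0.9]), ([0.8, 0.3], [0.1, 0.2])]
weights = train_linear_rm(data, dim=2)
```

After training, the learned weights favor the first feature and penalize the second, and every chosen response scores above its rejected counterpart, even though the model only ever saw which response won each comparison.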