Introduction to Word2Vec

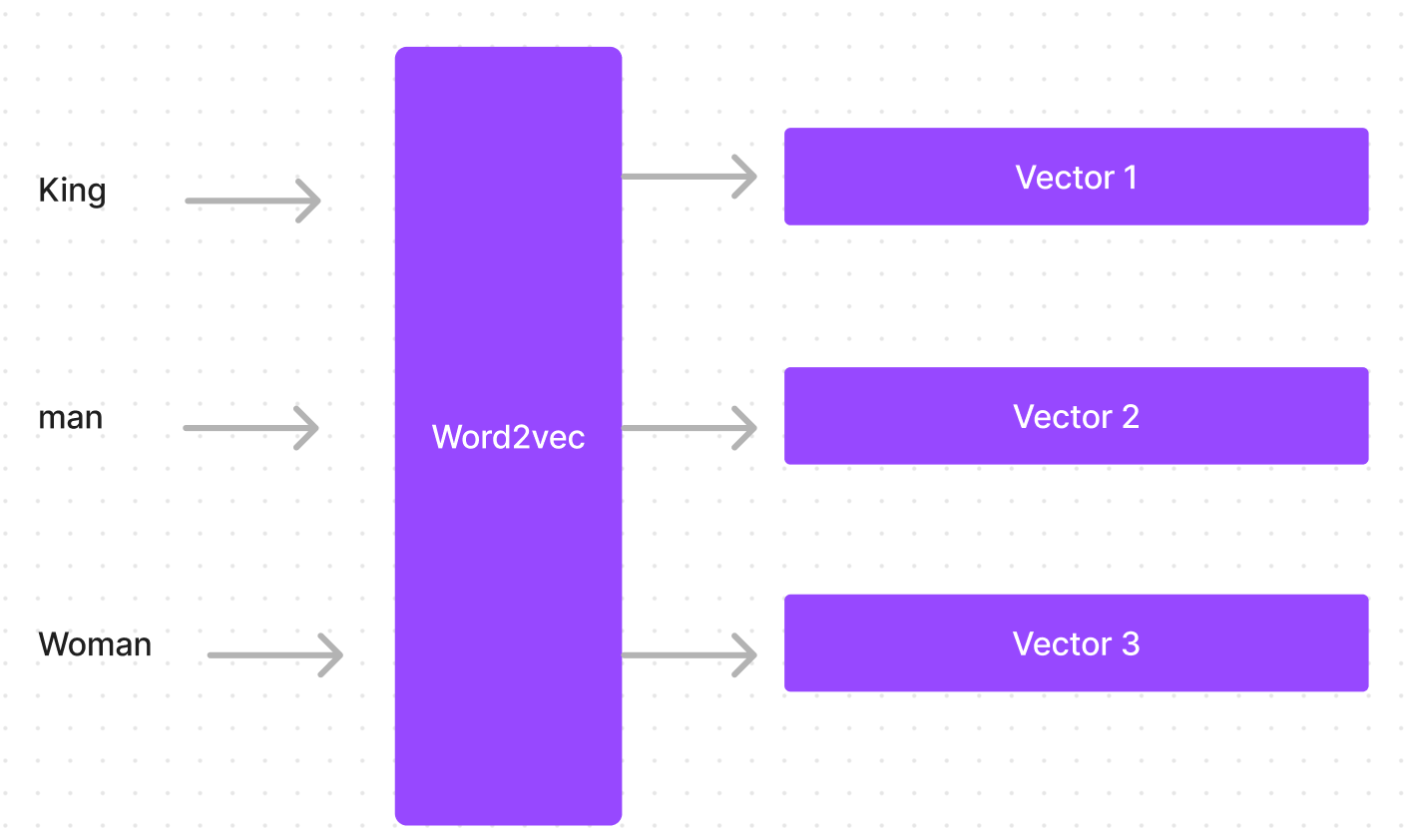

Word2vec is a popular group of related models used to produce word embeddings. Word embeddings are vector representations of words that capture semantic meaning, so that words with similar meanings have similar representations. Word2vec models are shallow, two-layer neural networks trained on large amounts of text to reconstruct the linguistic contexts of words. Depending on the architecture, the model learns to predict a word from its surrounding context or the surrounding context from a word. In doing so, the network learns efficient vector representations of words that capture surprisingly many linguistic regularities and patterns.

Brief History

The word2vec models were originally created by a team of researchers led by Tomas Mikolov at Google in 2013. The work built upon prior research on neural network language models as well as work on vector space models of semantics in the early 2000s. However, word2vec popularized these techniques and made it easier to train word vectors at scale. Facebook AI Research later released extensions to word2vec in 2017 and 2018 with the fastText model, which incorporates subword information.

Since its release, word2vec has been widely adopted in natural language processing and machine learning applications as an effective technique for learning semantically meaningful word embeddings from text data. Replacing manually created features with word2vec embeddings improved performance on many tasks, from sentiment analysis to document classification. Today, many natural language processing systems still use static word embeddings trained with techniques like word2vec, while state-of-the-art systems generally rely on contextual representations from large Transformer language models such as GPT-3, which are a different kind of model rather than a variant of word2vec.

Model Architectures

There are two well-known model architectures that were introduced with word2vec for learning embeddings: the continuous bag-of-words (CBOW) model and the continuous skip-gram model.

The CBOW model is used to predict a target word from the surrounding context words. This model takes the surrounding words as input and tries to predict what would be the most likely word appearing in the middle of that context. The order of context words does not influence prediction.

On the other hand, the skip-gram model does the inverse and tries to predict a window of surrounding words given the target word in the middle as input. The skip-gram architecture weighs closer context words more heavily than more distant context words.
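The difference between the two architectures is easiest to see in the training examples they consume. The sketch below is illustrative only: the function names `cbow_examples` and `skipgram_examples` and the sample sentence are assumptions made for this example, not part of any word2vec implementation.

```python
def cbow_examples(tokens, window):
    """Return (context_words, target_word) examples: many inputs, one output."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        if context:
            examples.append((context, target))
    return examples


def skipgram_examples(tokens, window):
    """Return (input_word, output_word) pairs: one input, one output per pair."""
    pairs = []
    for context, target in cbow_examples(tokens, window):
        # Skip-gram reverses the direction: the center word predicts each neighbor.
        pairs.extend((target, neighbor) for neighbor in context)
    return pairs


tokens = "the quick brown fox jumps".split()
print(cbow_examples(tokens, window=1)[1])       # (['the', 'brown'], 'quick')
print(skipgram_examples(tokens, window=1)[:3])  # [('the', 'quick'), ('quick', 'the'), ('quick', 'brown')]
```

CBOW produces one example per position, with the whole context as input, whereas skip-gram produces one example per (center, neighbor) pair.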

Both model architectures are shallow, two-layer neural networks. By training these models on large corpora, the hidden layer learns an efficient encoding of each word in a lower-dimensional vector space where semantically similar words are mapped to nearby points. These vector representations are what's output as the word embeddings.
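To make the role of the hidden layer concrete, the following sketch shows that multiplying a one-hot input vector by the input weight matrix simply selects one row of that matrix. The four-word vocabulary, the weights, and the name `W_in` are invented for illustration. The selected row is the word's embedding, which is why frameworks implement this layer as a table lookup.

```python
def one_hot(index, size):
    """Return a one-hot vector of the given size."""
    return [1.0 if i == index else 0.0 for i in range(size)]


def vec_mat(vector, matrix):
    """Multiply a row vector of length V by a V x D matrix."""
    rows, cols = len(matrix), len(matrix[0])
    return [sum(vector[r] * matrix[r][c] for r in range(rows)) for c in range(cols)]


# Hypothetical vocabulary with 3-dimensional embeddings (made-up numbers).
vocab = ["king", "queen", "man", "woman"]
W_in = [
    [0.9, 0.8, 0.1],  # king
    [0.9, 0.1, 0.8],  # queen
    [0.1, 0.9, 0.1],  # man
    [0.1, 0.1, 0.9],  # woman
]

idx = vocab.index("queen")
hidden = vec_mat(one_hot(idx, len(vocab)), W_in)
print(hidden)               # the hidden-layer activation for "queen"
print(hidden == W_in[idx])  # True: it is just row `idx` of W_in
```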

Key Properties of Word Embeddings

The word embeddings produced by word2vec exhibit some fascinating properties derivable purely from statistics of co-occurrences in sequences of words.

Most famously, vector operations reveal semantic relationships between words. For example, a simple analogy such as "man is to woman as king is to x" can be solved with vector arithmetic: vector("king") - vector("man") + vector("woman") yields a vector whose nearest neighbor is the vector for "queen". The same approach works for syntactic relationships such as verb tense and singular/plural forms.
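The analogy arithmetic can be sketched in a few lines. The vectors below are hand-built toy values chosen so that the analogy works exactly; real word2vec embeddings have hundreds of dimensions and only approximate this behavior. The helper name `analogy` is an assumption for this example. Excluding the three query words from the candidates is a common convention when evaluating analogies.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(a, b, c, vectors):
    """Solve "a is to b as c is to ?" via vector(b) - vector(a) + vector(c)."""
    query = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(query, vectors[w]))


# Toy dimensions: [royalty, male, female, fruit] (made-up values).
toy = {
    "king":   [1.0, 1.0, 0.0, 0.0],
    "queen":  [1.0, 0.0, 1.0, 0.0],
    "man":    [0.0, 1.0, 0.0, 0.0],
    "woman":  [0.0, 0.0, 1.0, 0.0],
    "prince": [0.8, 1.0, 0.0, 0.0],
    "apple":  [0.0, 0.0, 0.0, 1.0],
}

print(analogy("man", "king", "woman", toy))  # queen
```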

Additionally, the similarity between two words can be measured by the cosine similarity of their vectors (equivalently, one minus their cosine distance). Words with similar meanings have a high cosine similarity, which makes it possible to retrieve the words most strongly associated with a query word.
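As a rough illustration, the sketch below ranks the neighbors of a query word by cosine similarity. The three-dimensional vectors are made up, and `most_similar` is a hypothetical helper written for this example rather than a library call.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between u and v, in [-1, 1]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word, vectors, top_n=3):
    """Return the top_n other words ranked by cosine similarity to `word`."""
    scores = [
        (other, cosine_similarity(vectors[word], vec))
        for other, vec in vectors.items()
        if other != word
    ]
    return sorted(scores, key=lambda item: item[1], reverse=True)[:top_n]


# Made-up embeddings: "cat" and "dog" point in similar directions, "car" does not.
toy = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

for neighbor, similarity in most_similar("cat", toy, top_n=2):
    print(f"{neighbor}: similarity={similarity:.3f}, distance={1 - similarity:.3f}")
```

With these toy values, "dog" ranks above "car" as a neighbor of "cat".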

Lastly, reducing the embeddings to two or three dimensions with PCA often reveals structure in the space: related words cluster together, and some directions roughly correspond to interpretable qualities such as gender, plurality, or part of speech. These are tendencies rather than guarantees, and individual raw dimensions are generally not interpretable on their own.
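A minimal sketch of this idea is shown below, using power iteration to find the first principal component of a handful of toy vectors. The vectors are deliberately constructed so that most of the variance lies along a "gender-like" axis. Real embeddings are far messier, and in practice one would use a numerical library rather than this hand-rolled routine.

```python
import math


def top_principal_component(rows, iterations=100):
    """Return (mean, direction) of the first principal component via power iteration."""
    n, dim = len(rows), len(rows[0])
    mean = [sum(r[d] for r in rows) / n for d in range(dim)]
    centered = [[r[d] - mean[d] for d in range(dim)] for r in rows]
    # Covariance matrix of the centered data (dim x dim).
    cov = [[sum(c[i] * c[j] for c in centered) / n for j in range(dim)] for i in range(dim)]
    direction = [1.0] * dim
    for _ in range(iterations):
        nxt = [sum(cov[i][j] * direction[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(x * x for x in nxt))
        direction = [x / norm for x in nxt]
    return mean, direction


def project(vec, mean, direction):
    """Coordinate of `vec` along the principal direction."""
    return sum((v - m) * d for v, m, d in zip(vec, mean, direction))


# Made-up vectors: the second coordinate separates male and female words.
toy = {
    "man":   [0.1, 1.0, 0.2],
    "woman": [0.1, -1.0, 0.2],
    "king":  [0.9, 1.0, 0.1],
    "queen": [0.9, -1.0, 0.1],
}

mean, direction = top_principal_component(list(toy.values()))
for word, vec in toy.items():
    print(f"{word}: {project(vec, mean, direction):+.2f}")
```

In this constructed example the first component separates "man" and "king" from "woman" and "queen" by sign.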

Training Word2Vec Models

Word2vec models are trained on a text corpus to reconstruct the linguistic contexts of words in sentences. The training objective maximizes the probability of the target word given its context (CBOW) or of the surrounding words given the target word (skip-gram), where the probability is computed with a softmax over the entire vocabulary. Because that normalization touches every word in the vocabulary on every update, it becomes computationally expensive, so approximations such as hierarchical softmax and negative sampling are used to speed up training.
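The cost difference can be seen by comparing the two losses for a single (center, context) pair. The sketch below is a simplified illustration under stated assumptions: the vectors are random, the function names are invented for this example, and negatives are drawn uniformly, whereas the original word2vec work samples them from a unigram distribution raised to the 3/4 power.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax_loss(center_vec, context_idx, output_vecs):
    """Negative log-probability of the context word under a full softmax.

    Scores every word in the vocabulary, so the cost grows with vocabulary size.
    """
    scores = [dot(center_vec, u) for u in output_vecs]
    top = max(scores)  # subtract the max for numerical stability
    log_z = top + math.log(sum(math.exp(s - top) for s in scores))
    return log_z - scores[context_idx]


def negative_sampling_loss(center_vec, context_idx, negative_idxs, output_vecs):
    """Negative-sampling loss: one true pair plus a few sampled 'noise' words."""
    positive = -math.log(sigmoid(dot(center_vec, output_vecs[context_idx])))
    negative = -sum(
        math.log(sigmoid(-dot(center_vec, output_vecs[k]))) for k in negative_idxs
    )
    return positive + negative


random.seed(0)
vocab_size, dim, num_negatives = 1000, 8, 5
output_vecs = [[random.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(vocab_size)]
center_vec = [random.uniform(-0.5, 0.5) for _ in range(dim)]
context_idx = 42

# Assumption: uniform sampling of negatives, for simplicity.
population = [i for i in range(vocab_size) if i != context_idx]
negative_idxs = random.sample(population, num_negatives)

print("full softmax loss:     ", softmax_loss(center_vec, context_idx, output_vecs))
print("negative sampling loss:", negative_sampling_loss(center_vec, context_idx, negative_idxs, output_vecs))
```

The full softmax computes 1,000 dot products for this one training pair, while negative sampling computes only six (one positive and five negatives). The two losses are different objectives, so their values are not directly comparable.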

Many word vector sets have already been pre-trained on huge text corpora, such as Wikipedia and Google News, and released publicly. However, custom word vectors can also be trained if a large corpus (>100M words) of domain-specific text is available. Such specialized vectors often capture the nuances of industry jargon and terminology better than general-purpose ones.

The original word2vec implementation was written in C. Today, most programmers prefer higher-level libraries. Gensim ships a ready-made Word2Vec implementation and can load pre-trained vectors, while frameworks like TensorFlow and PyTorch provide the embedding layers needed to implement the models and to train them on GPU/TPU accelerators, which helps scale training to datasets with millions of sentences.

Here we provide a simple PyTorch implementation for training a skip-gram Word2Vec model on a toy sentence. It uses a full softmax over the vocabulary instead of negative sampling, so it is meant for illustration rather than for training at scale:

    import torch
    import torch.nn as nn
    import torch.optim as optim


    # Define the SkipGram model: an embedding layer followed by a linear
    # layer that scores every vocabulary word as a possible context word.
    class SkipGramModel(nn.Module):
        def __init__(self, vocab_size, embedding_dim):
            super().__init__()
            self.embeddings = nn.Embedding(vocab_size, embedding_dim)
            self.linear = nn.Linear(embedding_dim, vocab_size)

        def forward(self, center_word):
            embeds = self.embeddings(center_word)  # (batch, embedding_dim)
            out = self.linear(embeds)              # (batch, vocab_size)
            log_probs = torch.log_softmax(out, dim=1)
            return log_probs


    # Function to convert words to indices
    def word_to_idx(word, word_to_ix):
        return word_to_ix[word]


    # Function to create (context, target) pairs; positions too close to
    # either end of the text to have a full window are skipped.
    def make_context_pairs(text, window_size):
        pairs = []
        for i in range(window_size, len(text) - window_size):
            context = [text[i + j] for j in range(-window_size, window_size + 1) if j != 0]
            target = text[i]
            pairs.append((context, target))
        return pairs


    # Example text (list of words)
    text = "This is an example text for Word2Vec using PyTorch".lower().split()

    # Build vocabulary (sorted so the word-to-index mapping is reproducible)
    vocab = sorted(set(text))
    vocab_size = len(vocab)

    # Create word to index mapping
    word_to_ix = {word: i for i, word in enumerate(vocab)}

    # Hyperparameters
    embedding_dim = 10
    learning_rate = 0.001
    window_size = 2
    epochs = 10

    # Create model
    model = SkipGramModel(vocab_size, embedding_dim)
    loss_function = nn.NLLLoss()
    optimizer = optim.SGD(model.parameters(), lr=learning_rate)

    # Training
    for epoch in range(epochs):
        total_loss = 0.0
        for context, target in make_context_pairs(text, window_size):
            context_idxs = torch.tensor([word_to_idx(w, word_to_ix) for w in context], dtype=torch.long)
            # Skip-gram: the target (center) word is the input, repeated once
            # for each context word it has to predict.
            center_idxs = torch.full_like(context_idxs, word_to_idx(target, word_to_ix))

            model.zero_grad()
            log_probs = model(center_idxs)  # (len(context), vocab_size)
            loss = loss_function(log_probs, context_idxs)
            loss.backward()
            optimizer.step()
            total_loss += loss.item()
        print(f"Epoch {epoch}: Total Loss: {total_loss}")

- `SkipGramModel` is a simple neural network with an embedding layer and a linear layer.

- The `make_context_pairs` function generates context-target pairs based on a given window size.

- The training loop iterates over these pairs, updating the model's weights to predict each context word from the target (center) word, which is the skip-gram objective.

Example Applications

Word embeddings from word2vec and related models have enabled significant advances across many natural language processing tasks by providing a convenient way to represent semantic meaning. Below are some example applications:

Sentiment Analysis: Word vectors provide informative features for training sentiment classifiers to determine emotional polarity or intensity scores. They capture semantics useful for identifying happy, sad, angry, etc. sentiment.

Document Classification: Word vectors can model key topics and concepts. A simple document vector can be created by averaging the vectors of the words a document contains. Such vectors are useful for categorizing news articles, support tickets, and other documents into thematic groups.
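The averaging step can be sketched as follows. The two-dimensional vectors and the helper name `average_vector` are assumptions for illustration. Words missing from the vocabulary are simply skipped, which is one common choice among several.

```python
def average_vector(tokens, vectors):
    """Mean of the vectors of known tokens, or None if no token is known."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        return None
    dim = len(known[0])
    return [sum(vec[d] for vec in known) / len(known) for d in range(dim)]


# Made-up embeddings: first coordinate is "sports-like", second is "finance-like".
toy = {
    "goal":   [1.0, 0.0],
    "match":  [0.8, 0.2],
    "stock":  [0.0, 1.0],
    "market": [0.2, 0.8],
}

sports_doc = "the match ended with a late goal".split()
finance_doc = "the stock market fell".split()

print(average_vector(sports_doc, toy))   # close to [0.9, 0.1]
print(average_vector(finance_doc, toy))  # close to [0.1, 0.9]
```

The resulting document vectors can then be passed as features to any standard classifier.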

Information Retrieval: Word vectors can complement keyword-based ranking schemes such as TF-IDF. Queries can then find relevant documents through semantic similarity instead of only exact keyword matches, which can surface relevant results that share no terms with the query.

Chatbots: Bots can suggest helpful responses by checking the semantic similarity between the user's message and candidate responses. This allows them to respond appropriately without needing strict keyword matches.

Limitations and Future Research

While revolutionary, word2vec still has some important limitations. Word embeddings are sensitive to biases in the training data, which can negatively impact downstream tasks. Because each word receives a single vector regardless of the sentence it appears in, they also struggle to represent context-dependent meaning, which requires deeper models.

Exciting areas of continued research include better handling of polysemy (multiple meanings per word), integrating morphology and multi-word units, making vectors less susceptible to unwanted biases, improved representations of rare words, and reduced memory requirements for holding huge embedding tables.

There is still much work needed to achieve human-level language understanding. However, word2vec represents an important step forward in that ultimate goal by showing the potential of unsupervised distributional semantics. The future integration of such techniques with large, contextualized language models promises further advances in the coming years.

Conclusion

In conclusion, word2vec is an influential set of shallow neural network models for learning vector representations of words from text data by predicting surrounding words. The word embeddings efficiently encode semantic meanings and relationships between terms. This provides tangible advances to algorithms designed to operate over human language data across a variety of applications. Continued evolution of word vector techniques remains an active area of natural language processing research.

References

- TensorFlow Implementation

- Efficient Estimation of Word Representations in Vector Space (https://arxiv.org/abs/1301.3781)